last modified July 27, 2020

Python BeautifulSoup tutorial is an introductory tutorial to the BeautifulSoup Python library. The examples find tags, traverse the document tree, modify the document, and scrape web pages.

BeautifulSoup

- There are different ways of scraping web pages using Python, for example with the requests and BeautifulSoup libraries. However, many web pages are dynamic and use JavaScript to load their content, and these websites often require a different approach to gather the data.

- A really nice thing about the BeautifulSoup library is that it is built on top of HTML parsing libraries like html5lib, lxml, and html.parser. So the BeautifulSoup object can be created and the parser library specified at the same time, for example soup = BeautifulSoup(r.content, 'html5lib').

Such tasks can be automated in Python with the Beautiful Soup module. A word on the dos and don’ts of web scraping: web scraping is legal in some contexts and illegal in others. For example, it is legal when the extracted data consists of directories and telephone listings for personal use.

BeautifulSoup is a Python library for parsing HTML and XML documents. It is often used for web scraping. BeautifulSoup transforms a complex HTML document into a complex tree of Python objects, such as tags, navigable strings, or comments.

Installing BeautifulSoup

We use the pip3 command to install the necessary modules.

We need to install the lxml module, which is used by BeautifulSoup, with the pip3 install lxml command.

BeautifulSoup itself is installed with the pip3 install beautifulsoup4 command.

The HTML file

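In the examples, we use a local index.html file. It contains a head with a meta tag, an h2 heading, a ul list with the mylist id holding several li items, and two p paragraphs.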

Python BeautifulSoup simple example

In the first example, we use the BeautifulSoup module to get three tags.

The code example prints HTML code of three tags.

We import the BeautifulSoup class from the bs4 module. The BeautifulSoup class is the main class for doing the work.

We open the index.html file and read its contents with the read method.

A BeautifulSoup object is created; the HTML data is passed to the constructor. The second argument specifies the parser.

Here we print the HTML code of two tags: h2 and head.

There are multiple li elements; the line prints the first one.
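Below is a minimal sketch of this first example. The tutorial's index.html is not reproduced here, so the script first writes a hypothetical stand-in containing the elements the later sections refer to, then reads it back. It passes "html.parser", Python's built-in parser, so it runs without lxml. Use "lxml" instead if you installed it.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the tutorial's index.html (the original file is not shown).
HTML = """<!DOCTYPE html>
<html><head><title>Header</title><meta charset="utf-8"></head>
<body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
<p>FreeBSD is an advanced computer operating system.</p>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""

# Write the stand-in file so we can open it the way the tutorial does.
with open("index.html", "w", encoding="utf-8") as f:
    f.write(HTML)

# Read the file contents and pass them to the BeautifulSoup constructor.
with open("index.html", "r", encoding="utf-8") as f:
    contents = f.read()

# The second argument selects the parser ("lxml" in the tutorial).
soup = BeautifulSoup(contents, "html.parser")

print(soup.h2)    # <h2>Operating systems</h2>
print(soup.head)  # the whole head tag
print(soup.li)    # <li>Solaris</li>, only the first li element
```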


BeautifulSoup tags, name, text

The name attribute of a tag gives its name and the text attribute its text content.

The code example prints HTML code, name, and text of the h2 tag.
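The following sketch shows the name and text attributes on a small inline document. The markup is a hypothetical stand-in.

```python
from bs4 import BeautifulSoup

# Hypothetical inline document standing in for index.html.
HTML = "<html><body><h2>Operating systems</h2><p>Some text</p></body></html>"
soup = BeautifulSoup(HTML, "html.parser")

# Print the tag's HTML code, its name, and its text content.
print(f"HTML: {soup.h2}, name: {soup.h2.name}, text: {soup.h2.text}")
# HTML: <h2>Operating systems</h2>, name: h2, text: Operating systems
```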


BeautifulSoup traverse tags

With the recursiveChildGenerator method we traverse the HTML document.

The example goes through the document tree and prints the names of all HTML tags.
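Here is a sketch of the traversal, using a hypothetical stand-in document. In current bs4 releases, recursiveChildGenerator is an older camelCase name that returns the same generator as the descendants attribute, so the sketch uses descendants. Text nodes have no tag name (their name is None), which is why the filter is needed.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><head><title>Header</title><meta charset="utf-8"></head>
<body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
<p>FreeBSD is an advanced computer operating system.</p>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Walk every node in the tree and keep only tags (text nodes have name None).
tag_names = [node.name for node in soup.descendants if node.name]
for name in tag_names:
    print(name)
```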


BeautifulSoup element children

With the children attribute, we can get the children of a tag.

The example retrieves the children of the html tag, places them into a Python list, and prints them to the console. Since the children attribute also returns the whitespace between the tags, we add a condition to include only the tag names.

The html tag has two children: head and body.
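A sketch of the children example on a hypothetical stand-in document. The condition on child.name drops the whitespace text nodes between the tags.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><head><title>Header</title><meta charset="utf-8"></head>
<body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li></ul>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Direct children only; whitespace strings have name None and are skipped.
children = [child.name for child in soup.html.children if child.name]
print(children)  # ['head', 'body']
```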

BeautifulSoup element descendants

With the descendants attribute, we get all descendants (children of all levels) of a tag.

The example retrieves all descendants of the body tag.
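A sketch of the same idea restricted to the body tag, again on a hypothetical stand-in document. Unlike children, descendants also reaches the li elements nested inside the ul.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><head><title>Header</title><meta charset="utf-8"></head>
<body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
<p>FreeBSD is an advanced computer operating system.</p>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# All tags nested in body, at any depth, in document order.
body_descendants = [node.name for node in soup.body.descendants if node.name]
print(body_descendants)
```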


BeautifulSoup web scraping

Requests is a simple Python HTTP library. It provides methods for accessing Web resources via HTTP.

The example retrieves the title of a simple web page. It also prints its parent.

We get the HTML data of the page.

We retrieve the HTML code of the title, its text, and the HTML code of its parent.
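This sketch splits the example into two helpers. One downloads the page with Requests, and the other extracts the title information. Keeping them separate means the parsing step can be tried offline. The function names are hypothetical.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    """Download a page with Requests and return its HTML text."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return response.text


def describe_title(html):
    """Return the title's HTML code, its text, and the HTML code of its parent."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.title
    return str(title), title.text, str(title.parent)
```

Usage, with a placeholder URL: print(*describe_title(fetch_html("http://example.com")), sep="\n").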


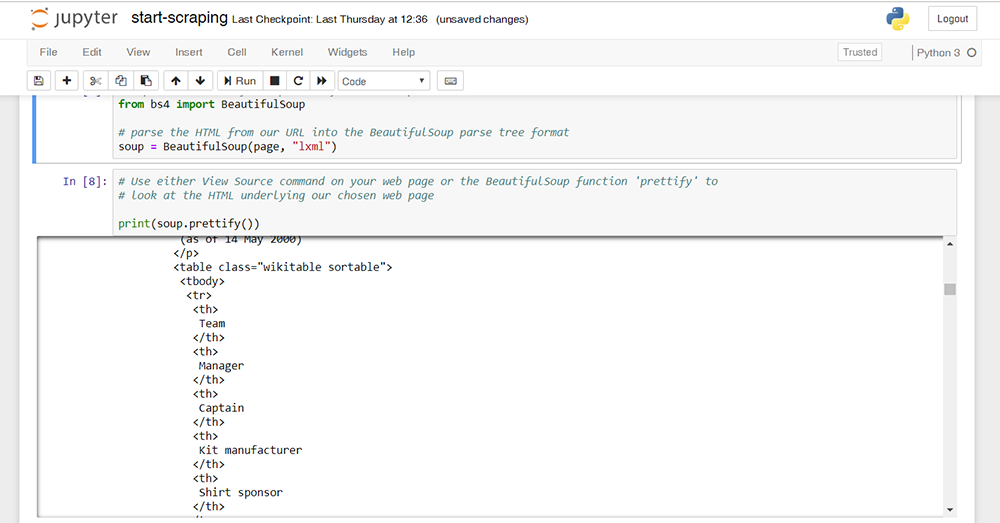

BeautifulSoup prettify code

With the prettify method, we can make the HTML code look better.

We prettify the HTML code of a simple web page.
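A minimal prettify sketch on a hypothetical one-line page. prettify returns a string with each tag and each text node on its own indented line.

```python
from bs4 import BeautifulSoup

# Hypothetical compact page, written on one line on purpose.
HTML = "<html><head><title>Home</title></head><body><p>Hello</p></body></html>"
soup = BeautifulSoup(HTML, "html.parser")

# prettify() returns the document as an indented, multi-line string.
pretty = soup.prettify()
print(pretty)
```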


BeautifulSoup scraping with built-in web server

We can also serve HTML pages with a simple built-in HTTP server.

We create a public directory and copy the index.html file there.

Then we start the Python HTTP server, for example with the python -m http.server command run from inside the public directory.

Now we get the document from the locally running server.
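The sketch below keeps everything in one script so it can run on its own. Instead of launching python -m http.server in a separate terminal, it starts the standard library's http.server in a background thread. It fetches the page with urllib.request rather than Requests, which keeps it dependency-free apart from bs4. The index.html content is a hypothetical stand-in.

```python
import threading
from functools import partial
from http.server import HTTPServer, SimpleHTTPRequestHandler
from pathlib import Path
from urllib.request import urlopen

from bs4 import BeautifulSoup

# Create the public directory with a hypothetical stand-in index.html.
public = Path("public")
public.mkdir(exist_ok=True)
(public / "index.html").write_text(
    "<html><head><title>Header</title></head>"
    "<body><h2>Operating systems</h2></body></html>",
    encoding="utf-8",
)

# Serve the public directory on a free local port (port 0 lets the OS pick one).
handler = partial(SimpleHTTPRequestHandler, directory=str(public))
server = HTTPServer(("127.0.0.1", 0), handler)
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

try:
    # Get the document from the locally running server and parse it.
    with urlopen(f"http://127.0.0.1:{port}/index.html") as response:
        soup = BeautifulSoup(response.read(), "html.parser")
finally:
    server.shutdown()
    server.server_close()

print(soup.title.text, "|", soup.h2.text)
```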

BeautifulSoup find elements by Id

With the find method, we can find elements by various means, including the element id.

The code example finds the ul tag that has the mylist id. The same task can also be done in an alternative way, for instance by passing the id inside an attrs dictionary.
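A sketch of both ways of finding the ul by its id, on a hypothetical stand-in document. Both calls return the same tag object from the tree.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li></ul>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Keyword form: id is passed as a keyword argument.
by_keyword = soup.find("ul", id="mylist")
# Alternative form: the attribute goes into an attrs dictionary.
by_attrs = soup.find("ul", attrs={"id": "mylist"})

print(by_keyword)
```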

BeautifulSoup find all tags

With the find_all method, we can find all elements that meet some criteria.

The code example finds and prints all li tags.
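A minimal find_all sketch on a hypothetical stand-in list. find_all returns every matching tag in document order.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Collect every li tag and print each one.
items = soup.find_all("li")
for li in items:
    print(li)
```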


The find_all method can take a list of elements to search for.

The example finds all h2 and p elements and prints their text.
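A sketch of passing a list of tag names to find_all, on a hypothetical stand-in document. Matches of any listed name come back in document order.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li></ul>
<p>FreeBSD is an advanced computer operating system.</p>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# A list of names matches any of them.
texts = [tag.text for tag in soup.find_all(["h2", "p"])]
for text in texts:
    print(text)
```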

The find_all method can also take a function that determines which elements should be returned.

The example prints empty elements.

The only empty element in the document is meta.
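A sketch of filtering with a function, on a hypothetical stand-in document. The function receives each tag and returns True to keep it. Here it uses the tag's is_empty_element property, which is true for void tags such as meta that have no contents.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><head><title>Header</title><meta charset="utf-8"></head>
<body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li></ul>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")


def is_empty(tag):
    """Keep tags that are empty-element (self-closing) tags."""
    return tag.is_empty_element


empty = soup.find_all(is_empty)
print(empty)
```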

It is also possible to find elements by using regular expressions.

The example prints content of elements that contain 'BSD' string.
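A sketch of matching text with a regular expression, on a hypothetical stand-in document. Passing a compiled pattern as the string argument returns the matching text nodes themselves.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body><h2>Operating systems</h2>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
<p>FreeBSD is an advanced computer operating system.</p>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Every text node in which the pattern "BSD" can be found.
found = soup.find_all(string=re.compile("BSD"))
for text in found:
    print(text.strip())
```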


BeautifulSoup CSS selectors

With the select and select_one methods, we can use some CSS selectors to find elements.

This example uses a CSS selector to print the HTML code of the third li element.


The # character is used in CSS to select tags by their id attributes.

The example prints the element that has mylist id.
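A sketch of both selector examples on a hypothetical stand-in list. select returns a list of matches, while select_one returns the first match or None.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# The third li among its siblings.
third = soup.select("li:nth-of-type(3)")
print(third)

# The element whose id attribute is mylist.
mylist = soup.select_one("#mylist")
print(mylist)
```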

BeautifulSoup append element

The append method appends a new tag to the HTML document.

The example appends a new li tag.

First, we create a new tag with the new_tag method.

We get the reference to the ul tag.

We append the newly created tag to the ul tag.

We print the ul tag in a neat format.
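The steps above, sketched on a hypothetical stand-in list. The new item's text, "OpenBSD", is an illustrative value.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body>
<ul id="mylist"><li>Solaris</li><li>FreeBSD</li><li>Debian</li><li>NetBSD</li><li>Windows</li></ul>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Create a new li tag and give it some text.
new_li = soup.new_tag("li")
new_li.string = "OpenBSD"

# Get a reference to the ul tag and append the new tag at its end.
ul = soup.ul
ul.append(new_li)

# Print the ul tag in a neat format.
print(ul.prettify())
```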

BeautifulSoup insert element

The insert method inserts a tag at the specified location.

The example inserts a li tag at the third position into the ul tag.
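A sketch of insert on a hypothetical stand-in list. The index counts every entry in ul.contents, including whitespace-only text nodes, so in indented HTML index 2 may not be the third li. The stand-in keeps the ul on one line so that the index and the li position agree.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in; no whitespace between the li tags on purpose.
HTML = ('<ul id="mylist"><li>Solaris</li><li>FreeBSD</li>'
        '<li>Debian</li><li>NetBSD</li><li>Windows</li></ul>')
soup = BeautifulSoup(HTML, "html.parser")

new_li = soup.new_tag("li")
new_li.string = "OpenBSD"  # illustrative value

# Index 2 in ul.contents is the third position.
soup.ul.insert(2, new_li)
print(soup.ul.prettify())
```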

BeautifulSoup replace text

The replace_with method replaces an element, such as a piece of text, with another one.

The example finds a specific element with the find method and replaces its content with the replace_with method.
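A sketch of replacing text, on a hypothetical stand-in list. The tutorial's exact target element is not shown here, so the choice of the "Windows" item and the replacement text are illustrative.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = ('<ul id="mylist"><li>Solaris</li><li>FreeBSD</li>'
        '<li>Debian</li><li>NetBSD</li><li>Windows</li></ul>')
soup = BeautifulSoup(HTML, "html.parser")

# Find the li whose text is "Windows" and swap its text node for new text.
windows = soup.find("li", string="Windows")
windows.string.replace_with("OpenBSD")

print(soup.ul)
```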

BeautifulSoup remove element

The decompose method removes a tag from the tree and destroys it.

The example removes the second p element.
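A sketch of removing the second p element from a hypothetical stand-in document. After decompose, the tag is gone from the tree and should not be used again.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html.
HTML = """<html><body>
<p>FreeBSD is an advanced computer operating system.</p>
<p>Debian is a Unix-like computer operating system.</p>
</body></html>"""
soup = BeautifulSoup(HTML, "html.parser")

# Remove the second p tag from the tree and destroy it.
second_p = soup.find_all("p")[1]
second_p.decompose()

print(soup.body)
```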

In this tutorial, we have worked with the Python BeautifulSoup library.


Today we will learn how to scrape a music web store using a Python library called Beautiful Soup. With simple, easy-to-read code, we are going to extract the data of all albums from our favourite music bands and store it in a .csv file.


It is simple, it is easy, and, even better, it is efficient. And it is a lot of fun!

| Table of contents |
| --- |
| Introduction |
| Getting ready |
| Importing libraries |
| Fetching the URL |
| Selecting elements from the URL |
| Getting our first album |
| Getting all the albums |
| Storing the albums in a file |
| Extra points! |
| Conclusion |


Introduction

If you know what Python, Beautiful Soup, and web scraping are, skip to the next lesson: How to get the next page with Beautiful Soup

If you don’t, let me give a brief jump-start to you with a short, easy explanation:

- Python: An easy-to-learn programming language. It is one of the most used programming languages because it is easy to learn, as it can be read like the English language.

- Beautiful Soup: A library (a set of pre-written code) that gives us methods to extract data from websites via web scraping.

- Web Scraping: A technique to extract data from websites.

With that in mind, we are going to install Beautiful Soup, scrape a website (Best CD Price) to fetch the data, and store it in a .csv file. Let’s go!

Getting ready

If you have used Python before, open your favourite IDE and create a new environment in the project’s folder.

If you have never used Python before and what I said sounds strange, don’t panic. You don’t need to install anything if you don’t want to. Just open repl.it, click ‘+ new repl’, select Python as the project’s language, and you are ready to go:

In this image, you have a white column in the middle where you’ll write the code, a black terminal on your right where the output will be displayed, and a column on your left listing all the Python files. This script has only one file.

Importing libraries

If we had to code everything with plain Python, it would take us days instead of less than 30 minutes. We need to import some libraries:

We are importing:

- Requests to fetch the HTML files

- BeautifulSoup to pull the data from HTML files

- lxml to parse (or translate) the HTML to Python

- Pandas to manipulate our data, printing it and saving it into a file

If we click “Run” it will download and install all the libraries. Don’t worry, it only installs them the first time.

Fetching the URL

The first step to scrape data from a URL? Fetching that URL.

Let’s make it simple: Go to Best CD Price and search for one band, then copy the resulting URL. This is mine: http://www.best-cd-price.co.uk/search-Keywords/1-/229816/sex+pistols.html

After the importing code, type this:

Run the code and you’ll see the “Everything is cool!” message.

We have stored our URL in ‘search_url’. We use the ‘get’ method from Requests to fetch the URL, and if everything is working properly, the URL is fetched with a 200 status code (Success) and we print ‘Everything is cool!’ in our terminal.
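The original code for this step is not reproduced here. The following is a minimal sketch of what the paragraph describes. The function name fetch_search_page and the timeout value are assumptions, and the site may no longer respond the way it did when the tutorial was written.

```python
import requests

# The search URL from the tutorial.
search_url = "http://www.best-cd-price.co.uk/search-Keywords/1-/229816/sex+pistols.html"


def fetch_search_page(url):
    """Fetch the search page and report whether the request succeeded (hypothetical helper)."""
    page = requests.get(url, timeout=10)
    if page.status_code == 200:  # 200 means the request succeeded
        print("Everything is cool!")
    return page
```

Usage: page = fetch_search_page(search_url).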

Python needs to understand the HTML code. To do so, we have to translate it, or parse it. Replace the last print with the following code:

We parse the page’s text, with the ‘lxml’ parser, and print the result.

Sounds familiar?

We have the whole page stored in the ‘bs’ variable. Now, let’s take the parts we need.
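A sketch of the parsing step, wrapped in a hypothetical parse_page helper so it can be tried on any HTML text. The tutorial passes "lxml" as the parser. This sketch uses the built-in "html.parser" so that no extra install is needed.

```python
from bs4 import BeautifulSoup


def parse_page(page_text):
    """Turn downloaded HTML text into a searchable BeautifulSoup tree."""
    # The tutorial uses "lxml"; "html.parser" ships with Python.
    return BeautifulSoup(page_text, "html.parser")


# Hypothetical HTML standing in for page.text from requests.get(...).
bs = parse_page("<html><head><title>Search results</title></head><body></body></html>")
print(bs)
```

With a real response, the call would be bs = parse_page(page.text).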

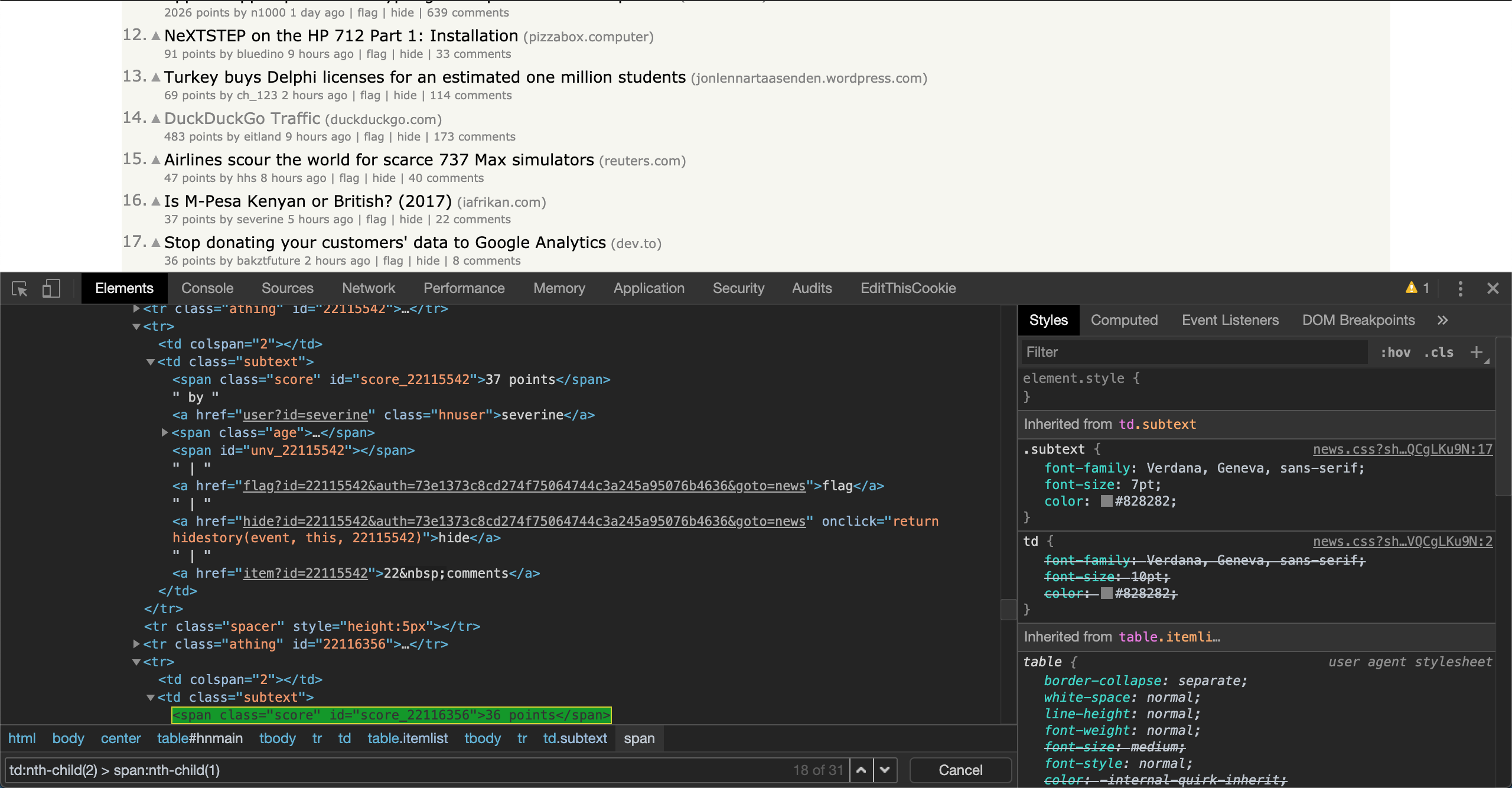

Selecting elements from the URL

Now the fun part begins!

For me, web scraping is fun especially because of this part of the process. We are like detectives at a crime scene, looking for hints we can follow up.

Copy the search URL and paste it into a browser. While Chrome is recommended, it is not mandatory. Right

You are looking at the skeleton of the website: The HTML code.

You can move your cursor to an HTML tag and that part of the website will be selected.

This is the equivalent of a detective magnifying glass. By hovering over HTML tags we can tell what part we need to select.

And I found our fi

We have our website stored in the ‘bs’ variable. We use the ‘findAll’ method to find every ‘li’ tag. But as there are 192 li elements we need to reduce our scope.

We are going to fetch every li tag that also has a class ‘ResultItem’ and then, print all of them and the length of the list.

We get the whole list and ’10’, as there are 10 items in our page. It is looking good!
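A sketch of narrowing the search to li tags with the ResultItem class. The markup is hypothetical: the real page has many more li elements, and its album markup is not reproduced here. The tutorial calls findAll, which is the older name of find_all; class_ (with the trailing underscore) is used because class is a Python keyword.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: two album results plus an unrelated li that must be skipped.
HTML = """<ul>
<li class="ResultItem">Album one</li>
<li class="ResultItem">Album two</li>
<li class="Advert">Not an album</li>
</ul>"""
bs = BeautifulSoup(HTML, "html.parser")

# Same as bs.findAll("li", {"class": "ResultItem"}).
list_all_cds = bs.find_all("li", class_="ResultItem")
print(list_all_cds)
print(len(list_all_cds))
```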

Getting our first album

We have a list of 10 (or fewer, depending on the band) albums. Let’s get one and see how we can extract the desired data. Then, following the same process, we will get the rest of them.

Remove the previous prints and type this:

After selecting all CDs, we store the first one into ‘cd’ and we print it:

You can view the same structure on the website too:

Let’s grab the information of this CD!

Following the same technique as before, we search for an ‘

Hm, we have the element, indeed. But we only need the image’s URL, which is stored in the ‘src’ attribute. We can extract it like a dictionary key, using [‘src’]
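A sketch of taking the first result and reading its image URL. The markup, including the image path and the Title class, is hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for one search result.
HTML = ('<li class="ResultItem"><img src="/images/album-1.jpg" alt="Cover">'
        '<a class="Title" href="/album-1">Album one</a></li>')
bs = BeautifulSoup(HTML, "html.parser")

list_all_cds = bs.find_all("li", class_="ResultItem")
cd = list_all_cds[0]        # the first CD

image_tag = cd.find("img")  # the whole img element
image = image_tag["src"]    # only the value of its src attribute
print(image)                # /images/album-1.jpg
```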

Nice, we extracted the image. Let’s keep going:

Cool! With a few lines we have everything!

We keep extracting the values we need. If the value is an attribute of the tag, such as ‘src’ or ‘href’, we use [‘src’] or [‘href’] to extract it. If it is the text between the starting and ending tag, we use ‘.text’.

As not every album has the same properties, we first try to find the element and, if it exists, we extract its value. If not, we just return an empty string:

Some values have extra text we don’t need, such as format_album or release_date. We remove that extra text with the ‘replace’ method, replacing that text with an empty string.
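The three rules above, put together in one sketch. The class names (Title, Format, Released), the "Format: " and "Release date: " prefixes, and the helper names are all hypothetical, since the site's real markup is not shown here. The second album deliberately lacks a release date to exercise the empty-string fallback.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; the second album has no release date.
HTML = """<ul>
<li class="ResultItem"><img src="/img/1.jpg"><a class="Title" href="/album/1">Album one</a>
<span class="Format">Format: CD</span><span class="Released">Release date: 1977</span></li>
<li class="ResultItem"><img src="/img/2.jpg"><a class="Title" href="/album/2">Album two</a>
<span class="Format">Format: Vinyl</span></li>
</ul>"""


def text_or_empty(cd, tag_name, css_class):
    """Return the stripped text of the first matching element, or '' if it is missing."""
    element = cd.find(tag_name, class_=css_class)
    return element.text.strip() if element else ""


def extract_album(cd):
    """Collect one album's values from its ResultItem li tag."""
    title = cd.find("a", class_="Title")
    return {
        "Image": cd.find("img")["src"],  # attribute -> ["src"]
        "Name": title.text.strip(),      # text between tags -> .text
        "URL": title["href"],            # attribute -> ["href"]
        "Format": text_or_empty(cd, "span", "Format").replace("Format: ", ""),
        "Release date": text_or_empty(cd, "span", "Released").replace("Release date: ", ""),
    }


bs = BeautifulSoup(HTML, "html.parser")
cd = bs.find_all("li", class_="ResultItem")[0]
print(extract_album(cd))
```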

This was the most complicated thing of the code, but I’m sure you crushed it.

Getting all the albums

We have everything to fetch the information from one CD, now let’s do the same with every CD and store it into an object.

Replace what is inside the ‘if page.status_code…’ statement with this:

‘data’ is our object structure. We are going to add each value to its key: the name of the album to data[‘Name’], and so on.

For each element in our list_all_cds, we are going to assign it the name ‘cd’ and run the code inside the for loop.

After getting each value, we append (or ‘add’) it to the data object:

And now, the print. The information is there, nice!
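A sketch of the loop that fills the data dictionary, one list per key. The ‘Name’ key comes from the tutorial. The other keys and the markup are hypothetical, and only a few fields are kept for brevity.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; the second album has no format.
HTML = """<ul>
<li class="ResultItem"><a class="Title" href="/album/1">Album one</a><span class="Format">Format: CD</span></li>
<li class="ResultItem"><a class="Title" href="/album/2">Album two</a></li>
</ul>"""
bs = BeautifulSoup(HTML, "html.parser")

# One list per column; each album appends one value to every list.
data = {"Name": [], "URL": [], "Format": []}

list_all_cds = bs.find_all("li", class_="ResultItem")
for cd in list_all_cds:
    title = cd.find("a", class_="Title")
    format_tag = cd.find("span", class_="Format")
    data["Name"].append(title.text.strip())
    data["URL"].append(title["href"])
    data["Format"].append(format_tag.text.replace("Format: ", "").strip() if format_tag else "")

print(data)
```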

But it is ugly and hard to read…

Remember we installed the ‘pandas’ library? That will help us display the data, and something else.

Storing the albums in a file

Let’s use that pandas library! Copy this at the end of the file, outside the for loop:

pandas (imported as pd) gives us a ‘DataFrame’ class, to which we pass the data and the list of columns. That’s it. That’s enough to create a beautiful table.

‘table.index = table.index +1’ sets the first index to ‘1’ instead of ‘0’.

The next line creates a .csv file, with a comma as separator and sets the encoding to ‘UTF-8’. We don’t want to store the index so we set it to false.
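A sketch of the pandas step. The values are hypothetical and have the same shape as the data dictionary built in the loop: one list per column.

```python
import pandas as pd

# Hypothetical scraped values, shaped like the data dictionary.
data = {"Name": ["Album one", "Album two"], "Format": ["CD", "Vinyl"]}

table = pd.DataFrame(data, columns=["Name", "Format"])
table.index = table.index + 1  # number the rows from 1 instead of 0
print(table)

# Comma-separated, UTF-8, without writing the index column.
table.to_csv("my_albums.csv", sep=",", encoding="utf-8", index=False)
```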

It looks better!

But now check your left column. You have a ‘my_albums.csv’ file. Everything is stored there!

Congratulations, you have written your first scraping script in Python!

Extra points!

You

But we can do better, right?

Why not ask the user for the name of a band and search for it? Can it be done?

Of course.

Replace the old code with the new one:

Here we ask the user to enter a band and format the name by replacing spaces with ‘+’ signs. This website does that when searching, so we have to do it too. Example: http://www.best-cd-price.co.uk/search-Keywords/1-/229816/sex+pistols.html


Now, our search URL uses the formatted band name

Not mandatory, but it is better practice to keep the URLs at the start of the code so they are easy to replace.

Now the file has the name of each band, so we can create any number of files we want without rewriting it! Let’s run the code.
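A sketch of the two helpers this step needs. It assumes that only the last part of the search URL changes between bands, as the example URL suggests. The file naming scheme is a hypothetical choice, since the tutorial's exact file name is not shown.

```python
# The fixed part of the search URL, kept at the top so it is easy to replace.
BASE_URL = "http://www.best-cd-price.co.uk/search-Keywords/1-/229816/"


def build_search_url(band):
    """Format the band name like the site's search does: spaces become '+'."""
    return BASE_URL + band.strip().replace(" ", "+") + ".html"


def csv_file_name(band):
    """Hypothetical naming scheme: one CSV file per band."""
    return band.strip().replace(" ", "_") + ".csv"
```

Usage: band = input("Enter a band: "), then fetch build_search_url(band) and pass csv_file_name(band) to to_csv.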

Conclusion

In just a few minutes we have learned how to:

- Fetch a website

- Locate the element(s) we want to scrape

- Analyze which HTML tags and classes we need to target to retrieve the values

- Store the retrieved values in an object

- Create a file from that object

- Make the code dynamic by letting users type the name of a band and storing the results in a file named after the band.


I’m really proud of you for reaching the end of this tutorial.


Right now we are scraping just one page. Wouldn’t it be great to learn how to scrape all the pages?


Now you can! How to get to the next page on Beautiful Soup

Contact me: DavidMM1707@gmail.com
